Herbert Zhenghao Zhou | 周正浩

📍 New Haven, CT

📧 herbert.zhou@yale.edu

🌗 computational psycholinguist

Welcome! I am a fourth-year PhD student in the Department of Linguistics at Yale University. I am deeply grateful to be advised by Robert Frank and Tom McCoy. I am an active member of the CLAY Lab and the Language & Brain Lab. I graduated from Washington University in St. Louis in 2022 with a B.S. in Computer Science & Mathematics and PNP (a philosophy-centric cognitive science program). I grew up in Shanghai, China.

What is the shared basis of intelligence in natural and artificial systems? I approach this question through the lens of language. My research lies at the intersection of computational linguistics and psycholinguistics, with the goal of understanding the mechanisms by which humans and AI models incrementally represent and process language. I am particularly interested in interpretability at the algorithmic/mechanistic level: to what extent do AI models implement the processing mechanisms we find in humans, and to what extent can knowledge from AI models inform our understanding of human cognition? I combine targeted behavioral evaluation, mechanistic interpretability, and human psycholinguistic experiments to study the bidirectional interactions between cognitive science and AI/NLP. I am also interested in other cognitively plausible and interpretable models of sentence processing, in particular dynamical system models such as the Gradient Symbolic Computation framework and Dynamic Field Theory. See more details in the Research tab.

Outside academia, I enjoy books 📖 and coffee ☕️ (you can always find me in cafés on weekends), music 🎼 and museums 🏛️ (I sing in the Contour A Cappella group at Yale), and biking 🚲 and hiking ⛰️ (though never professionally, as I enjoy the casual flow).

news

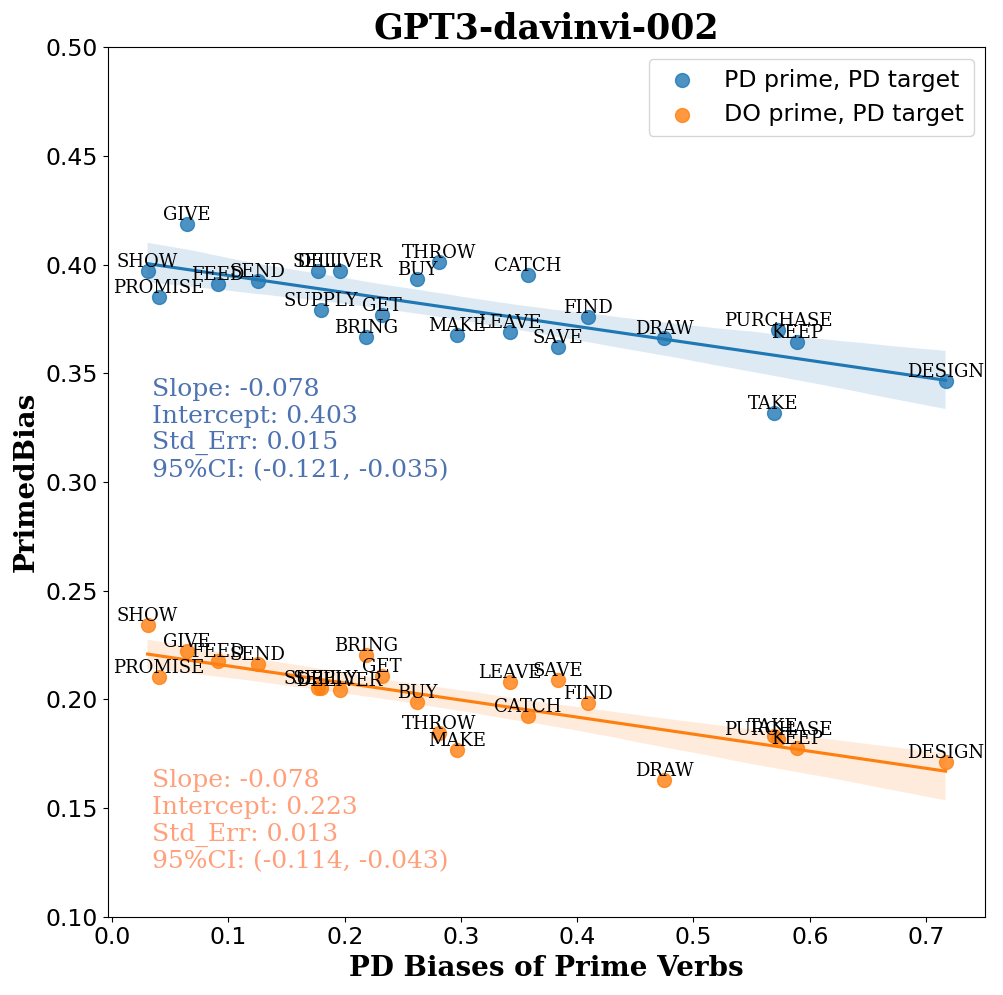

| Date | News |
|---|---|
| Dec 05, 2025 | I am attending the CogInterp workshop 🌗 in San Diego! Here is my poster on causal interventions on continuous verb biases in LLMs. Check it out 😀 |
| Oct 09, 2025 | I gave a guest lecture 🧑‍🏫 on Neural Networks in the class Language and Computation I! See the Teaching tab for slides 📝. |
| Oct 03, 2025 | I defended my dissertation prospectus and am officially ABD 🎉! |
| Sep 23, 2025 | Two short papers accepted to CogInterp 🌗! One, with Paul Smolensky et al., was selected for an oral presentation. See you in San Diego in early December! |
| Jun 12, 2025 | I will go to the LSA Summer Institute again! I gained so many precious memories and friendships in 2023 at UMass Amherst, and I am excited to meet old and new friends in Eugene in July 💫! |

selected publications


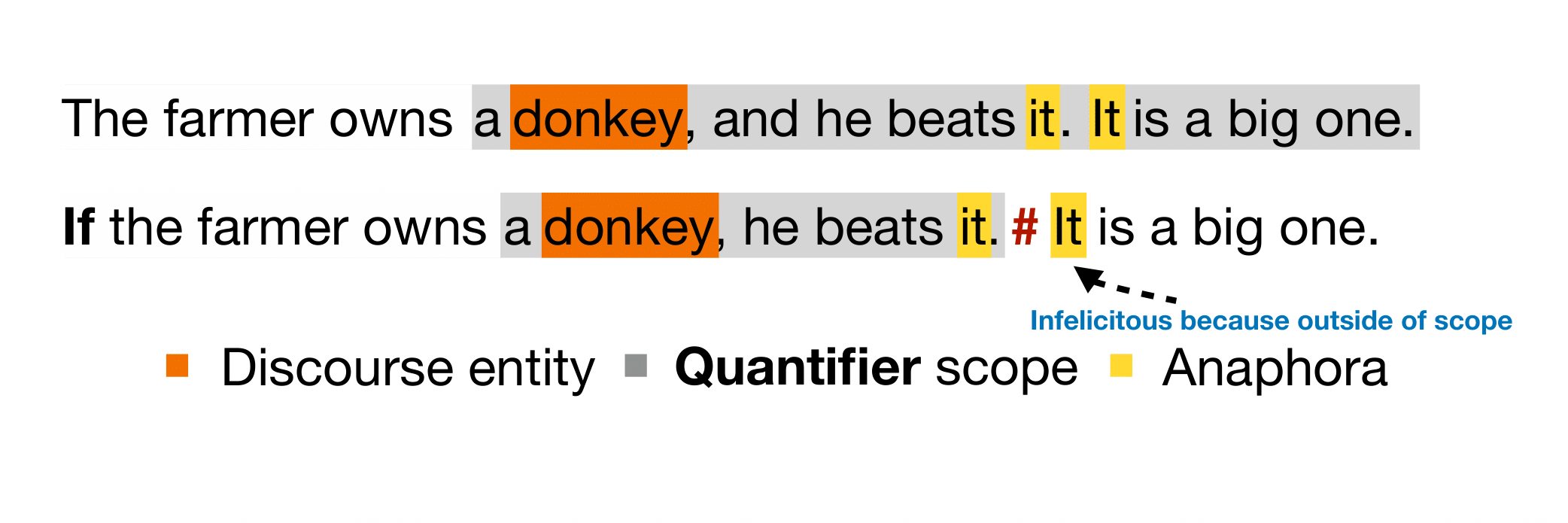

- Meaning Beyond Truth Conditions: Evaluating Discourse Level Understanding via Anaphora Accessibility. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 8824–8842, Vienna, Austria. Association for Computational Linguistics, Jul 2025.


- Is In-Context Learning a Type of Error-Driven Learning? Evidence from the Inverse Frequency Effect in Structural Priming. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Apr 2025.
